Envoy as Edge Proxy over Kubernetes

What is Envoy?

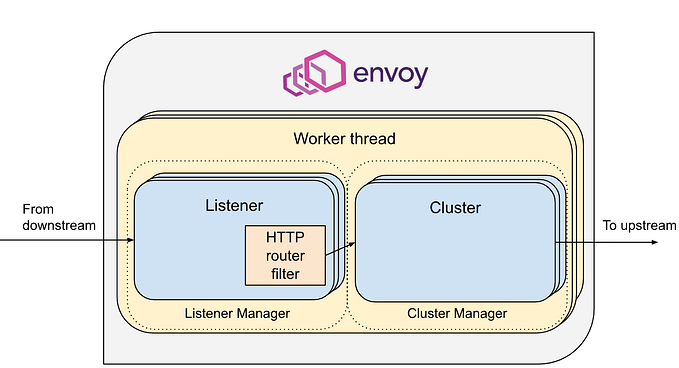

Envoy is an open-source, modern, high-performance proxy. It was designed for cloud-native applications and for large microservice “service mesh” architectures.

In the world of Envoy, the backend is called upstream and the front-end (or client) is called downstream. However, to keep things simple, we won’t use these terms in this blog.

Let’s deploy

Don’t worry, I’ll keep it simple!

Deploy Environment

To simulate a Kubernetes cluster, we’ll use minikube with Docker Desktop:

minikube start --nodes 3 --profile my-minikube-cluster

The above command creates a minikube cluster with 3 nodes under the profile my-minikube-cluster.

Backend Environment

To simulate a backend environment, we’re using 2 simple nginx deployments whose services listen on port 81 and port 82 respectively. All the backend resources will be in a namespace named “nginx-app-namespace”.

nginx-app-1.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: nginx-app-namespace
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-app-config-1
  namespace: nginx-app-namespace
data:
  index.html: |
    App-1: Hello, I'm Ankit Kumar!
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app-deployment-1
  namespace: nginx-app-namespace
spec:
  # replicas: 2
  selector:
    matchLabels:
      app: nginx-app-pod-label-1
  template:
    metadata:
      labels:
        app: nginx-app-pod-label-1
    spec:
      containers:
        - name: nginx-app-container-1
          image: nginx:latest
          ports:
            - containerPort: 80
          lifecycle:
            preStop:
              exec:
                command: ["/usr/sbin/nginx", "-s", "quit"]
          volumeMounts:
            - name: nginx-webpage-volume-1
              mountPath: /usr/share/nginx/html
          resources:
            requests:
              memory: "150Mi"
              cpu: "250m"
            limits:
              memory: "250Mi"
              cpu: "500m"
      volumes:
        - name: nginx-webpage-volume-1
          configMap:
            name: nginx-app-config-1
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-app-service-1
  namespace: nginx-app-namespace
spec:
  selector:
    app: nginx-app-pod-label-1
  ports:
    - protocol: TCP
      port: 81
      targetPort: 80
  type: ClusterIP

nginx-app-2.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: nginx-app-namespace
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-app-config-2
  namespace: nginx-app-namespace
data:
  index.html: |
    App-2: Hello, what's your good name?
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app-deployment-2
  namespace: nginx-app-namespace
spec:
  # replicas: 3
  selector:
    matchLabels:
      app: nginx-app-pod-label-2
  template:
    metadata:
      labels:
        app: nginx-app-pod-label-2
    spec:
      containers:
        - name: nginx-app-container-2
          image: nginx:latest
          ports:
            - containerPort: 80
          lifecycle:
            preStop:
              exec:
                command: ["/usr/sbin/nginx", "-s", "quit"]
          volumeMounts:
            - name: nginx-webpage-volume-2
              mountPath: /usr/share/nginx/html
          resources:
            requests:
              memory: "150Mi"
              cpu: "250m"
            limits:
              memory: "250Mi"
              cpu: "500m"
      volumes:
        - name: nginx-webpage-volume-2
          configMap:
            name: nginx-app-config-2
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-app-service-2
  namespace: nginx-app-namespace
spec:
  selector:
    app: nginx-app-pod-label-2
  ports:
    - protocol: TCP
      port: 82
      targetPort: 80
  type: ClusterIP

Deploy above applications:

kubectl apply -f ./nginx-app-1.yaml -f ./nginx-app-2.yaml

kubectl get pods -n nginx-app-namespace

Deploying Envoy as a Layer 7 HTTP proxy over Kubernetes

Envoy will route and load balance traffic to the backend nginx deployments.

Note: We are currently using version 1.28.x, the latest stable release at the time of writing this blog.

envoy-deployment.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: envoy
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp
  namespace: envoy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
        - name: envoy
          image: envoyproxy/envoy:v1.28-latest
          ports:
            - containerPort: 9000
          volumeMounts:
            - name: envoy-config
              mountPath: /etc/envoy
      volumes:
        - name: envoy-config
          configMap:
            name: envoy-configmap
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: envoy-configmap
  namespace: envoy
data:
  envoy.yaml: |
    static_resources:
      listeners:
        - address:
            socket_address:
              address: 0.0.0.0
              port_value: 9000
          filter_chains:
            - filters:
                - name: envoy.filters.network.http_connection_manager
                  typed_config:
                    "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                    stat_prefix: http_proxy
                    route_config:
                      name: all
                      virtual_hosts:
                        - name: all_backend_cluster
                          domains:
                            - '*'
                          routes:
                            - match: { prefix: "/app1" }
                              route:
                                cluster: app1_backend_cluster
                                prefix_rewrite: "/"
                            - match: { prefix: "/app2" }
                              route:
                                cluster: app2_backend_cluster
                                prefix_rewrite: "/"
                            - match: { prefix: "/" }
                              route:
                                cluster: all_backend_cluster
                                prefix_rewrite: "/"
                    http_filters:
                      - name: envoy.filters.http.router
                        typed_config:
                          "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
      clusters:
        - name: all_backend_cluster
          connect_timeout: 1s
          type: strict_dns
          lb_policy: ROUND_ROBIN
          load_assignment:
            cluster_name: all_backend_cluster
            endpoints:
              - lb_endpoints:
                  - endpoint:
                      address:
                        socket_address:
                          address: nginx-app-service-1.nginx-app-namespace.svc.cluster.local
                          port_value: 81
                  - endpoint:
                      address:
                        socket_address:
                          address: nginx-app-service-2.nginx-app-namespace.svc.cluster.local
                          port_value: 82
        - name: app1_backend_cluster
          connect_timeout: 1s
          type: strict_dns
          lb_policy: ROUND_ROBIN
          load_assignment:
            cluster_name: app1_backend_cluster
            endpoints:
              - lb_endpoints:
                  - endpoint:
                      address:
                        socket_address:
                          address: nginx-app-service-1.nginx-app-namespace.svc.cluster.local
                          port_value: 81
        - name: app2_backend_cluster
          connect_timeout: 1s
          type: strict_dns
          lb_policy: ROUND_ROBIN
          load_assignment:
            cluster_name: app2_backend_cluster
            endpoints:
              - lb_endpoints:
                  - endpoint:
                      address:
                        socket_address:
                          address: nginx-app-service-2.nginx-app-namespace.svc.cluster.local
                          port_value: 82

Let’s take some time to understand the configuration in `envoy.yaml`. An envoy configuration file has two main components i.e.:

- Listeners

- Clusters

Both are defined under the static_resources block.

1. Listeners

This is where we define where and how Envoy accepts and filters/processes incoming traffic.

In layman’s terms, it’s where Envoy receives incoming traffic from the front-end.

Some of the configuration that we do here is:

Socket address

The address and port on which Envoy listens for traffic from the front-end.

Filters

They allow us to customize and manipulate the behavior of the proxy for specific types of traffic. Envoy has various built-in filters. Here we’re using one such filter for HTTP traffic, the HTTP connection manager (envoy.filters.network.http_connection_manager). For other filters, see Envoy’s documentation.

Each filter has its own set of configurable parameters. In the case of our HTTP filter, we use them to route requests to a specific backend based on the request path, and to rewrite the matched path prefix (prefix_rewrite) before the request is forwarded.

In our configuration, if a request path starts with “/app1” it’ll be routed to “app1_backend_cluster”, and similarly “/app2” goes to “app2_backend_cluster”. Any other path matches “/” and is routed to “all_backend_cluster”.

Note: The route rules are checked in the order in which they are written, i.e. the rule written first is checked first and the first match wins. That is why the catch-all “/” rule comes last.
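To make the ordering rule concrete, here is a small Python sketch of first-match prefix routing. It is only a toy model of the three routes in our envoy.yaml, not Envoy’s actual implementation; the names Route, ROUTES and pick_route are made up for illustration.

```python
from typing import List, NamedTuple, Optional, Tuple


class Route(NamedTuple):
    prefix: str          # value of match.prefix
    cluster: str         # value of route.cluster
    prefix_rewrite: str  # value of route.prefix_rewrite


# Same order as the routes in envoy.yaml (hypothetical in-memory copy).
ROUTES: List[Route] = [
    Route("/app1", "app1_backend_cluster", "/"),
    Route("/app2", "app2_backend_cluster", "/"),
    Route("/", "all_backend_cluster", "/"),
]


def pick_route(path: str, routes: List[Route] = ROUTES) -> Optional[Tuple[str, str]]:
    """Return (cluster, rewritten_path) for the first route whose prefix matches."""
    for route in routes:
        if path.startswith(route.prefix):
            # The matched prefix is swapped for the rewrite value.
            rewritten = route.prefix_rewrite + path[len(route.prefix):]
            return route.cluster, rewritten
    return None


print(pick_route("/app1"))   # ('app1_backend_cluster', '/')
print(pick_route("/other"))  # ('all_backend_cluster', '/other')
```

If the catch-all “/” route were listed first, pick_route("/app1") would return all_backend_cluster, because “/” is a prefix of every path.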

2. Clusters

A cluster is where we define the backends and how traffic is distributed among them.

Some important things that we configure here are:

Connection Timeout

The maximum time Envoy will wait while trying to establish a connection to the backend. If the connection is not established within this time frame, Envoy considers it a failure.
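To get a feel for what a 1s connect timeout means, here is a rough Python analogue using a plain TCP socket. This is only an illustration of the idea and not how Envoy itself connects; can_connect is a hypothetical helper name.

```python
import socket


def can_connect(host: str, port: int, timeout_s: float = 1.0) -> bool:
    """Return True if a TCP connection is established within timeout_s seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers timeouts, refused connections and DNS failures alike.
        return False
```

For example, while the port-forward from later in this blog is running, can_connect("localhost", 9000) should return True.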

Type

The value of this field determines how Envoy discovers and manages backend hosts. In our case, we’re using strict_dns, which means Envoy resolves the DNS names given in the endpoints and treats each returned IP address as a backend host to connect to.

Load Balancing Policy

Specifies the load balancing policy for distributing traffic among the backend hosts. Envoy provides many load balancing policies. In our case, we’re using the standard round robin (ROUND_ROBIN).
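As a rough mental model, round robin just hands out the backends in turn. The Python sketch below shows that idea with the two endpoints of all_backend_cluster. It is a simplification that ignores things like weights and health checks, and round_robin is a name chosen only for this example.

```python
from itertools import cycle
from typing import Iterator, List

# The two endpoints configured for all_backend_cluster.
ENDPOINTS: List[str] = [
    "nginx-app-service-1.nginx-app-namespace.svc.cluster.local:81",
    "nginx-app-service-2.nginx-app-namespace.svc.cluster.local:82",
]


def round_robin(endpoints: List[str]) -> Iterator[str]:
    """Yield endpoints one after another, starting over at the end of the list."""
    return cycle(endpoints)


picker = round_robin(ENDPOINTS)
first_four = [next(picker) for _ in range(4)]
for endpoint in first_four:
    print(endpoint)  # alternates between service-1 and service-2
```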

Endpoints

It’s where we define the backend host endpoints, i.e. the address and port on which they are exposed. In our case, since Envoy runs in the same Kubernetes cluster as the backends, we use <service-name>.<namespace-name>.svc.cluster.local as the address to point to the services of the nginx deployments.
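The address pattern above is easy to build by hand, but here is a tiny Python helper that spells it out. It assumes the default cluster domain cluster.local; service_dns_name is a hypothetical name used only for this illustration.

```python
def service_dns_name(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_dns_name("nginx-app-service-1", "nginx-app-namespace"))
# nginx-app-service-1.nginx-app-namespace.svc.cluster.local
```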

In our config file, we have configured 3 clusters:

- all_backend_cluster

Here we have configured 2 backend endpoints, i.e. the services of both nginx deployments. So, traffic from any routing rule using this cluster as backend will be load balanced between the 2 services.

- app1_backend_cluster

Here we have only 1 backend endpoint, i.e. the service of nginx-app-1. So, traffic from any routing rule using this cluster as backend will always be routed to nginx-app-1.

- app2_backend_cluster

Similar to app1_backend_cluster, here all the traffic will always be routed to nginx-app-2.

To deploy envoy use:

kubectl apply -f ./envoy-deployment.yaml

The Envoy pod is configured (by the above ConfigMap) to listen on port 9000. Once the Envoy pod is ready, you can test it by forwarding a local port to it:

kubectl port-forward -n envoy po/<your-envoy-pod-name> 9000:9000

Note: Be sure to substitute your pod name in the above command.

Or you can directly use:

kubectl port-forward -n envoy po/$(kubectl get po --no-headers -n envoy | awk '{ print $1 }') 9000:9000

Now if you open:

http://localhost:9000

in your browser, you should see the page of one of the nginx backends (for example, “App-1: Hello, I'm Ankit Kumar!”), and if you refresh, you should see the content of the other nginx backend (“App-2: Hello, what's your good name?”).

If you open:

http://localhost:9000/app1

you’ll always land on app-1, and similarly for http://localhost:9000/app2 you’ll always land on app-2.
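If you’d rather check this from a script than from the browser, a small Python sketch like the one below could do it. It assumes the port-forward above is still running on localhost:9000 and that the pages contain the “App-1” and “App-2” markers from our ConfigMaps; fetch_body and check_routes are names made up for this example.

```python
from typing import Callable, Dict
from urllib.request import urlopen

BASE_URL = "http://localhost:9000"

# Path -> text we expect to find in the page served for that path.
EXPECTED: Dict[str, str] = {"/app1": "App-1", "/app2": "App-2"}


def fetch_body(url: str) -> str:
    """Fetch a URL and return the response body as text."""
    with urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def check_routes(fetch: Callable[[str], str] = fetch_body, base_url: str = BASE_URL) -> Dict[str, bool]:
    """Return, for each path, whether the expected marker was found in the response."""
    return {path: marker in fetch(base_url + path) for path, marker in EXPECTED.items()}
```

Calling check_routes() with the port-forward running should give True for both paths if the routing works as described. The fetch parameter is there only so the check can be tried without a cluster by passing in a fake fetch function.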

This was a pretty basic configuration of Envoy. Feel free to explore its more powerful features in Envoy’s official documentation.

I hope it helped. If yes, please give it a like and share this blog with your geek friends.

Thank You!

See you soon!